About Me

Hi! I am a fourth-year (final-year) Ph.D. student in MMLab at The Chinese University of Hong Kong, advised by Prof. Dahua Lin. Prior to this, I earned my Bachelor’s degree in Computer Science and Technology (2022) from the Chu Kochen Honors College (Pursuit Science Class), Zhejiang University, where I was supervised by Prof. Guofeng Zhang in the State Key Laboratory of CAD&CG. I have research experience at several leading research institutions, including Meta FAIR, Shanghai AI Lab, and SenseTime Research. My awards include the ECCV 2024 Best Paper Candidate, the Hong Kong PhD Fellowship, the CUHK Vice-Chancellor’s PhD Scholarship, and the Undergraduate National Scholarship.

My long-term goal is to develop an intelligent model that can universally perceive and reason about our 3D physical world, primarily from visual information together with other multi-modal data, and that can be deployed on robots or wearable devices (such as AI/AR glasses). This pursuit aims to augment human intelligence and benefit society at large. Currently, I focus on equipping LLMs and VLMs with scalable spatial intelligence. More specifically, my work includes developing foundation models for spatial intelligence, establishing stronger benchmarks to evaluate these models’ spatial capabilities, and building practical applications that use these models to solve real-world problems.

If you share my interests, have helpful articles to recommend, or have any questions, please do not hesitate to contact me. :)

I am on the job market (expected to graduate in 2026).

News

- [Dec. 2025] We release MMSI-Video-Bench, a benchmark for video-based spatial intelligence! 📊

- [Nov. 2025] We release G2VLM, a geometry-grounded VLM with unified 3D reconstruction and spatial reasoning!

- [Oct. 2025] We release ChangingGrounding, a novel task for 3D visual grounding in changing scenes!

- [Sep. 2025] Our OST-Bench has been accepted to NeurIPS 2025! 🎉

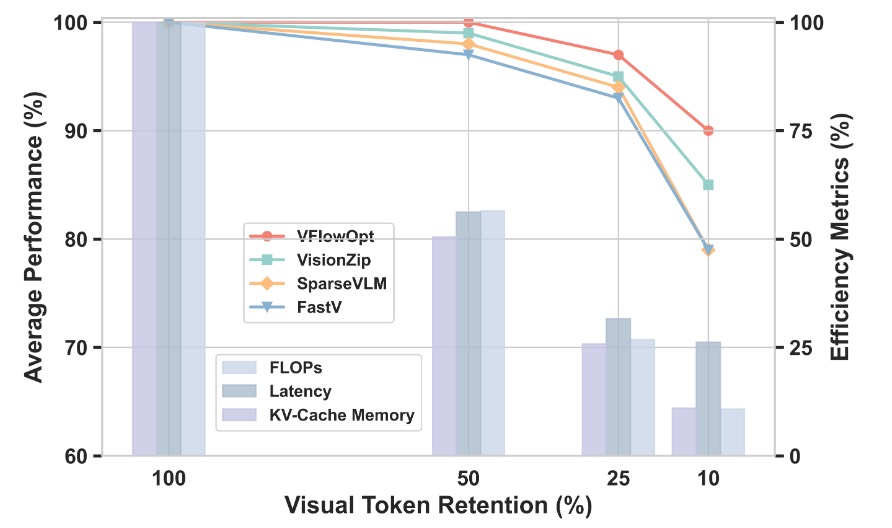

- [Aug. 2025] We release our ICCV 2025 paper VFlowOpt, a token pruning framework for accelerating VLM inference.

- [Jul. 2025] Our improved version of PointLLM, PointLLM-V2, has been accepted by TPAMI 2025! 🎉

- [Jul. 2025] We release OST-Bench, a benchmark for evaluating the capabilities of MLLMs in online spatio-temporal scene understanding! 📊

- [Jun. 2025] We release RoboMaster, a video generation method for robotic manipulation with trajectory control! 🤖

- [Jun. 2025] We release MMSI-Bench, a novel, comprehensive, fully human-annotated, reasoning-based benchmark for multi-image spatial intelligence! 📊

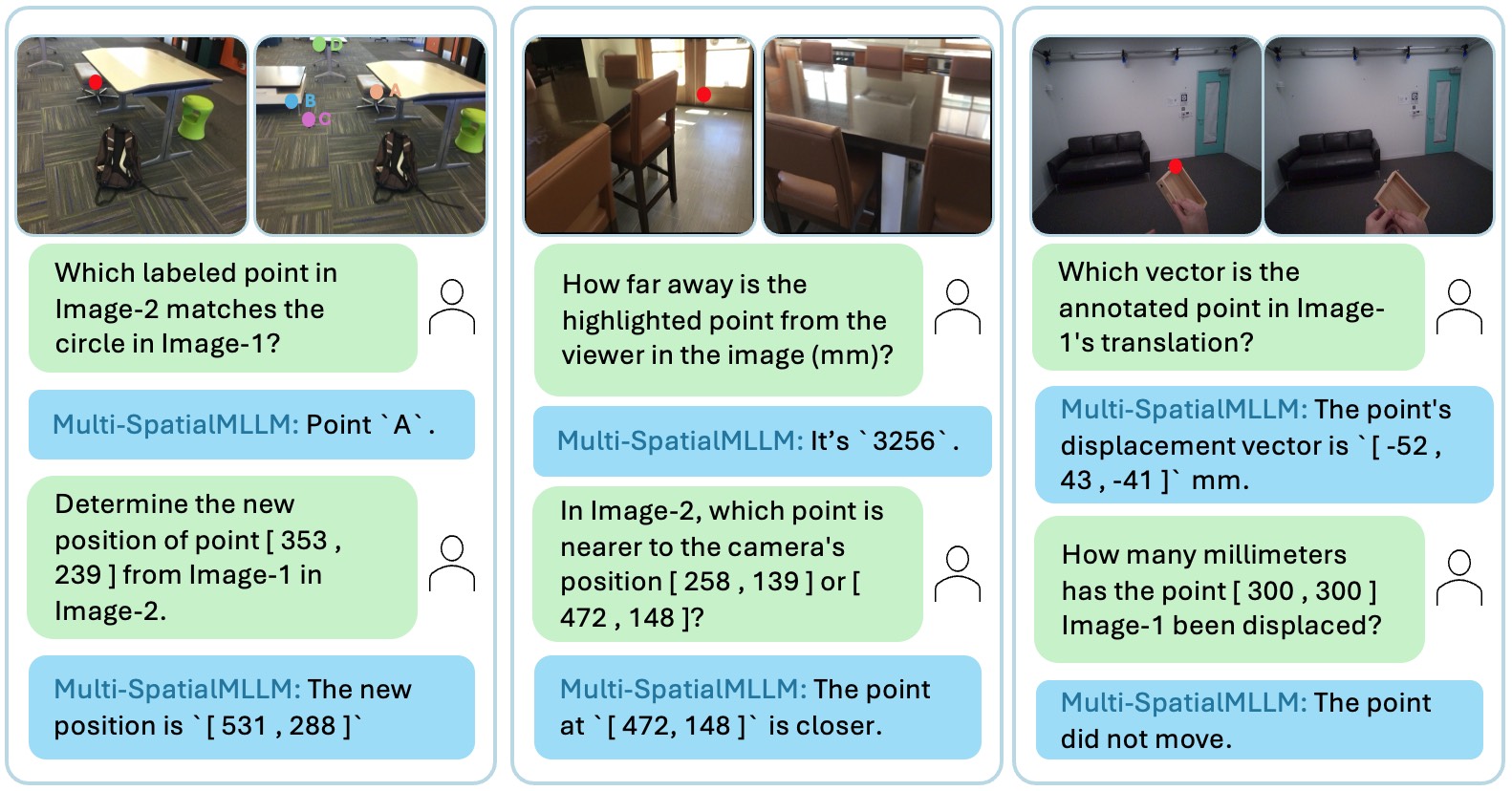

- [May 2025] We release Multi-SpatialMLLM, a VLM capable of multi-frame spatial understanding, such as predicting object and camera movement vectors! 🤩

- [Dec. 2024] I completed my internship at Meta. It was an enjoyable and wonderful experience!

- [Oct. 2024] PointLLM was accepted to ECCV 2024 with all “strong accept” reviews and selected as a Best Paper Candidate! 🎉

- [Sep. 2024] Our paper VLM-Grounder, a VLM agent for zero-shot 3D visual grounding, has been accepted by CoRL 2024! 🥳

- [Sep. 2024] Our MMScan, which provides hierarchical grounded language annotations for multi-modal 3D scene understanding, and Chat-Scene, a 3D-LLM with superior scene understanding performance, were accepted by NeurIPS 2024! 🎉

- [Jun. 2024] I started my internship as a Research Scientist Intern at FAIR Perception, Meta in Menlo Park, CA. 🚀

Past News

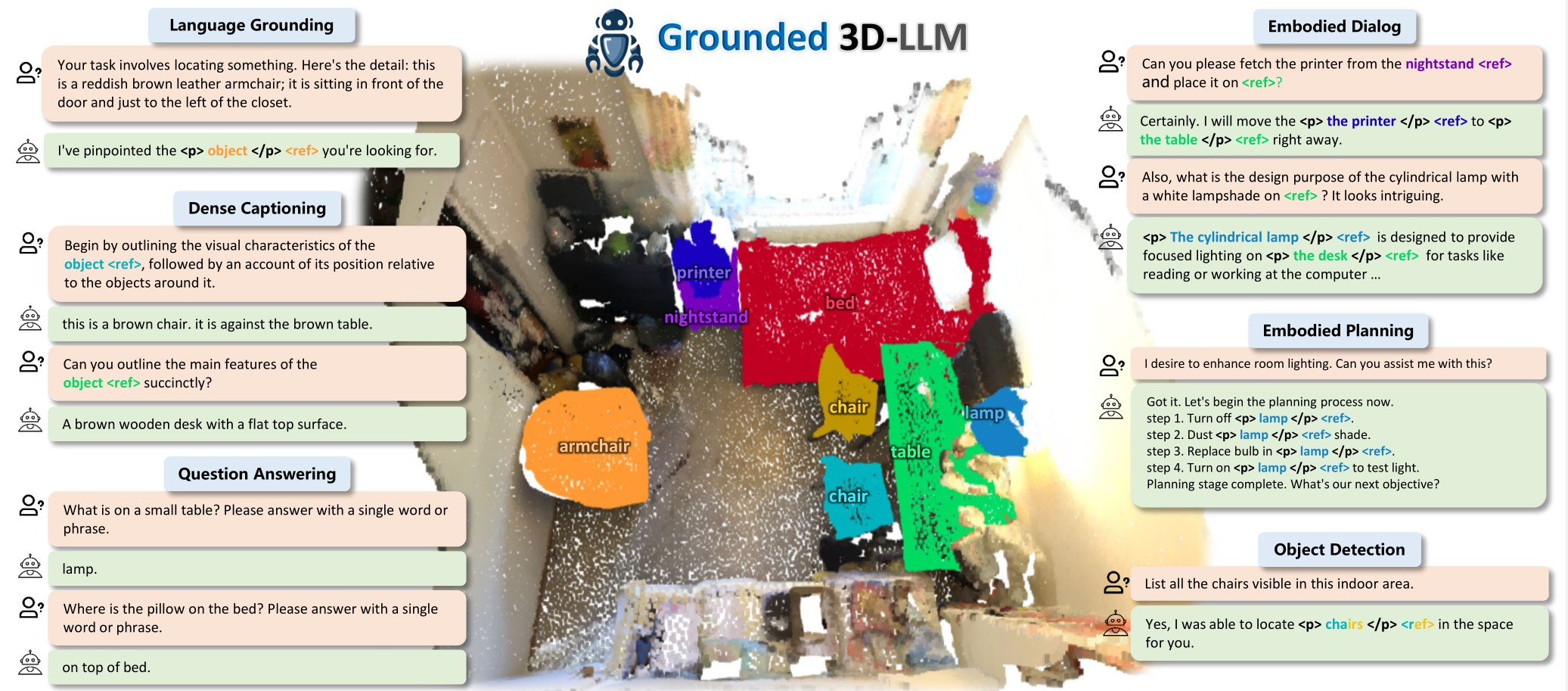

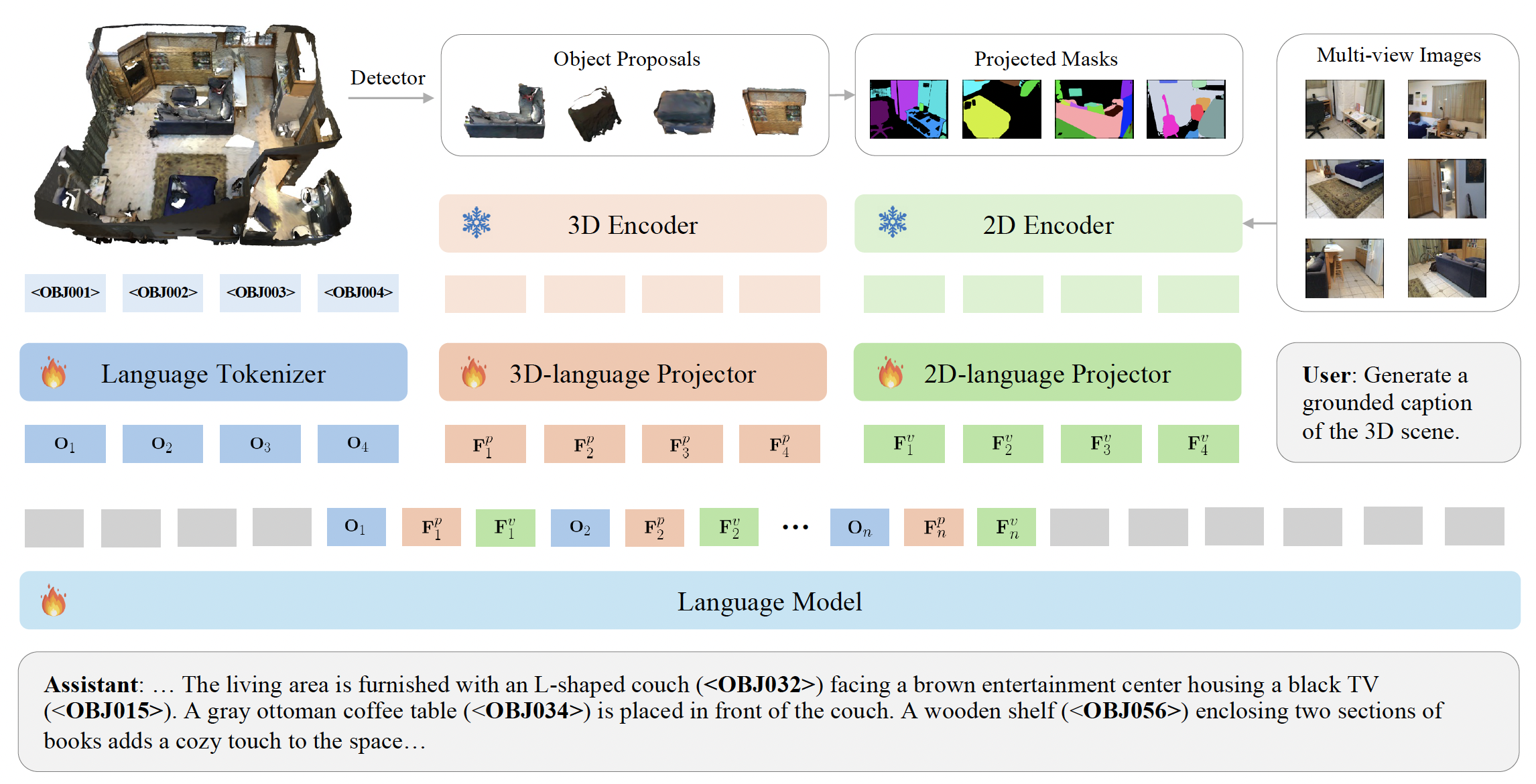

- [May 2024] We release Grounded 3D-LLM, a 3D-LLM capable of object grounding in 3D scenes! 🤩

- [Feb. 2024] Our EmbodiedScan was accepted to CVPR 2024! It is a multi-modal 3D dataset with high-quality human annotations for embodied 3D scene perception! 🤩

- [Aug. 2023] We release PointLLM, a multi-modal large language model capable of understanding point clouds! Try our demo here. 🤗

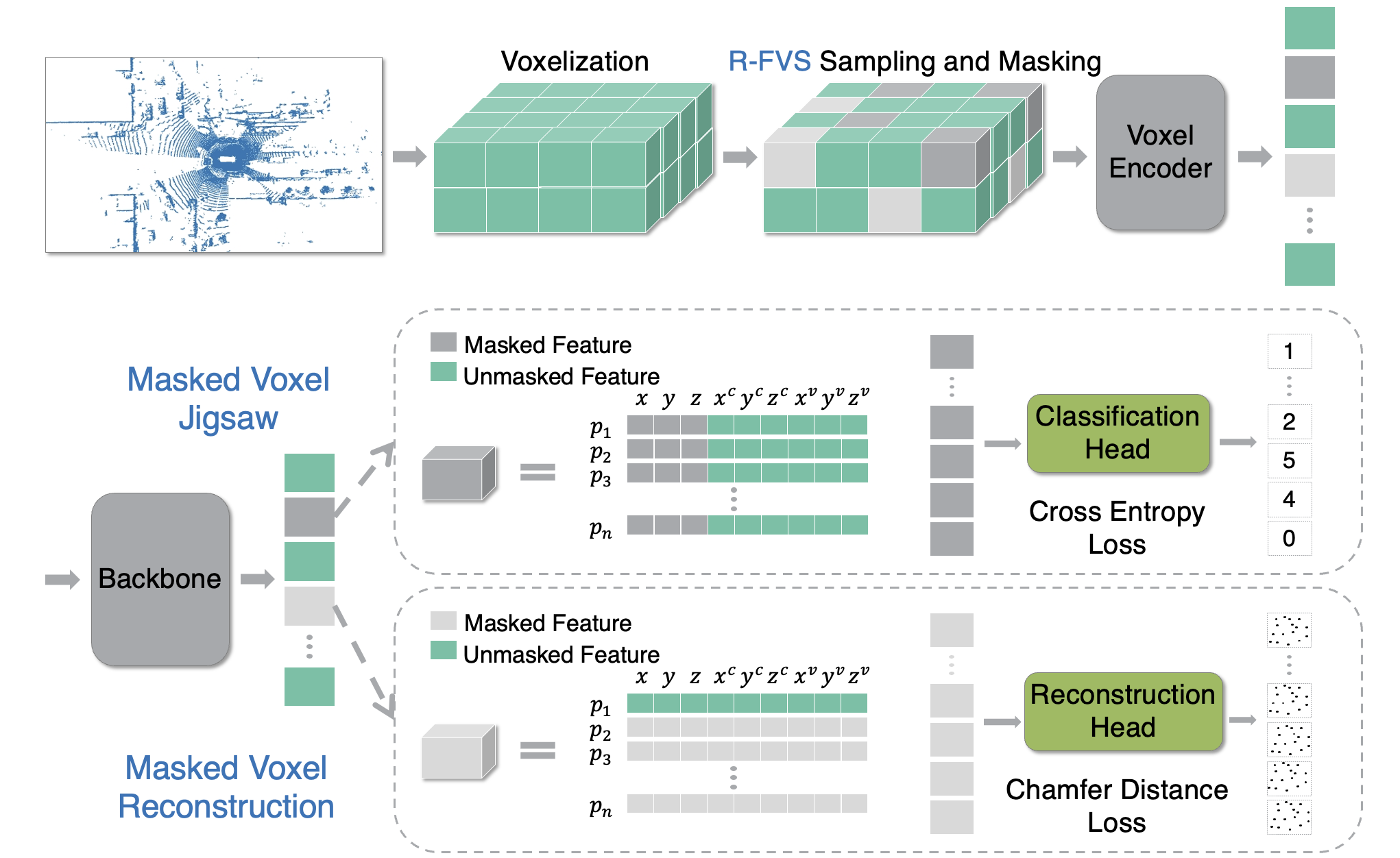

- [Mar. 2023] Our paper MV-JAR, a LiDAR-based self-supervised pre-training method along with a new data-efficient benchmark, has been accepted by CVPR 2023. 🎉

- [Jun. 2022] Graduated from Zhejiang University. Forever cherishing the memories from ZJU. 🎓

- [Apr. 2022] Honored to receive the Hong Kong PhD Fellowship (HKPFS) and the CUHK Vice-Chancellor’s PhD Scholarship. Deeply grateful for the recognition! 🏆

Education

- The Chinese University of Hong Kong (CUHK)

- August 2022 - June 2026 (Expected)

- Ph.D. in Information Engineering

- Zhejiang University (ZJU)

- September 2018 - June 2022

- B.Eng. in Computer Science and Technology

Selected Publications

* denotes equal contribution, # denotes corresponding author, † denotes project lead.

- Spatial Intelligence for LLMs/VLMs

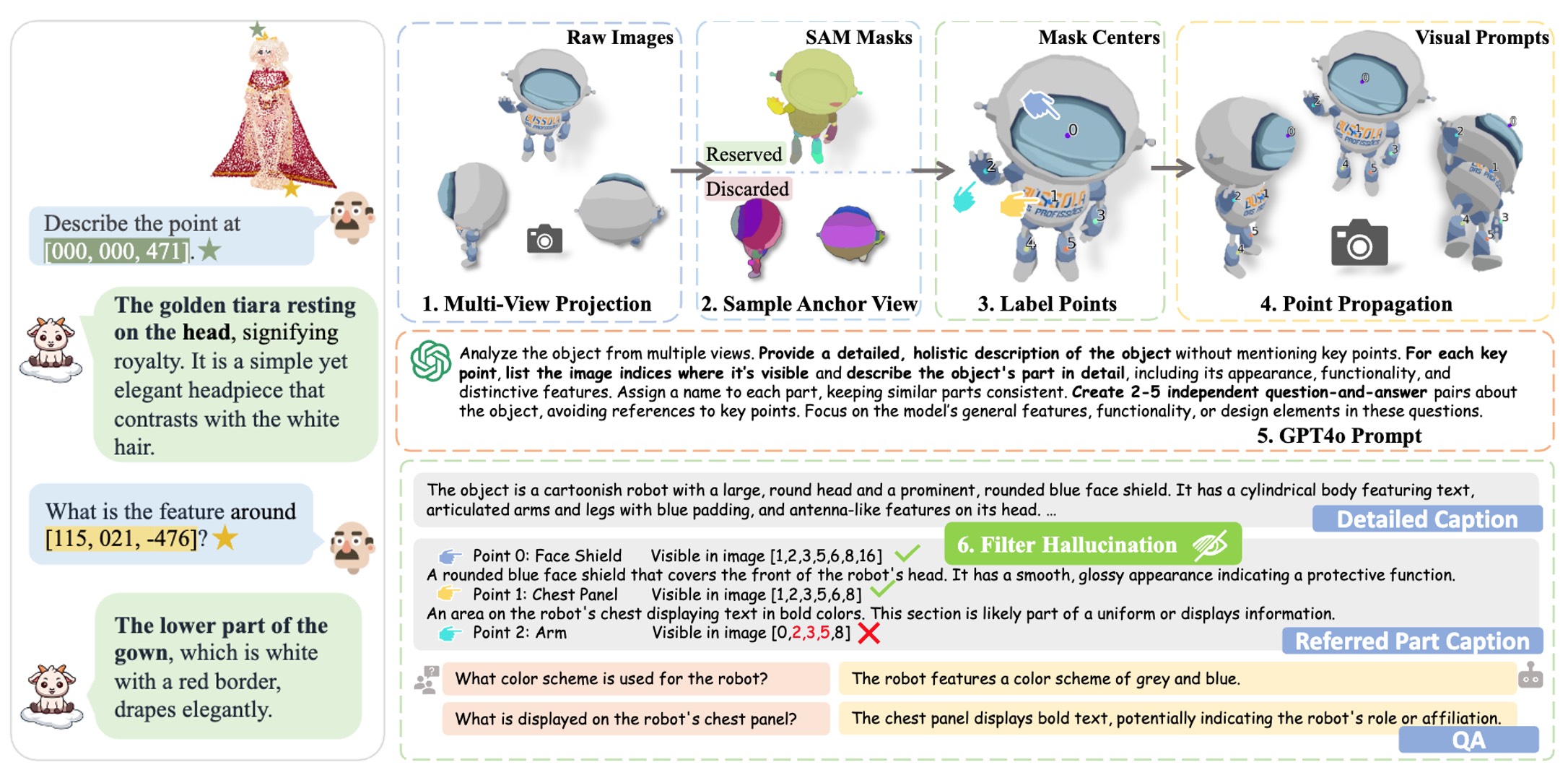

- PointLLM-V2: Empowering Large Language Models to Better Understand Point Clouds

- Runsen Xu*, Shuai Yang*, Xiaolong Wang, Tai Wang#, Yilun Chen, Jiangmiao Pang#, Dahua Lin

- Transactions on Pattern Analysis and Machine Intelligence, TPAMI 2025

- [Paper] [Code] [Project]

- MMSI-Video-Bench: A Holistic Benchmark for Video-Based Spatial Intelligence

- Jingli Lin*, Runsen Xu*†, Shaohao Zhu, Sihan Yang, Peizhou Cao, Yunlong Ran, Miao Hu, Chenming Zhu, Yiman Xie, Yilin Long, Wenbo Hu, Dahua Lin, Tai Wang#, Jiangmiao Pang#

- arXiv Preprint, 2025

- [Paper] [Code] [Project] [Dataset] [中文解读]

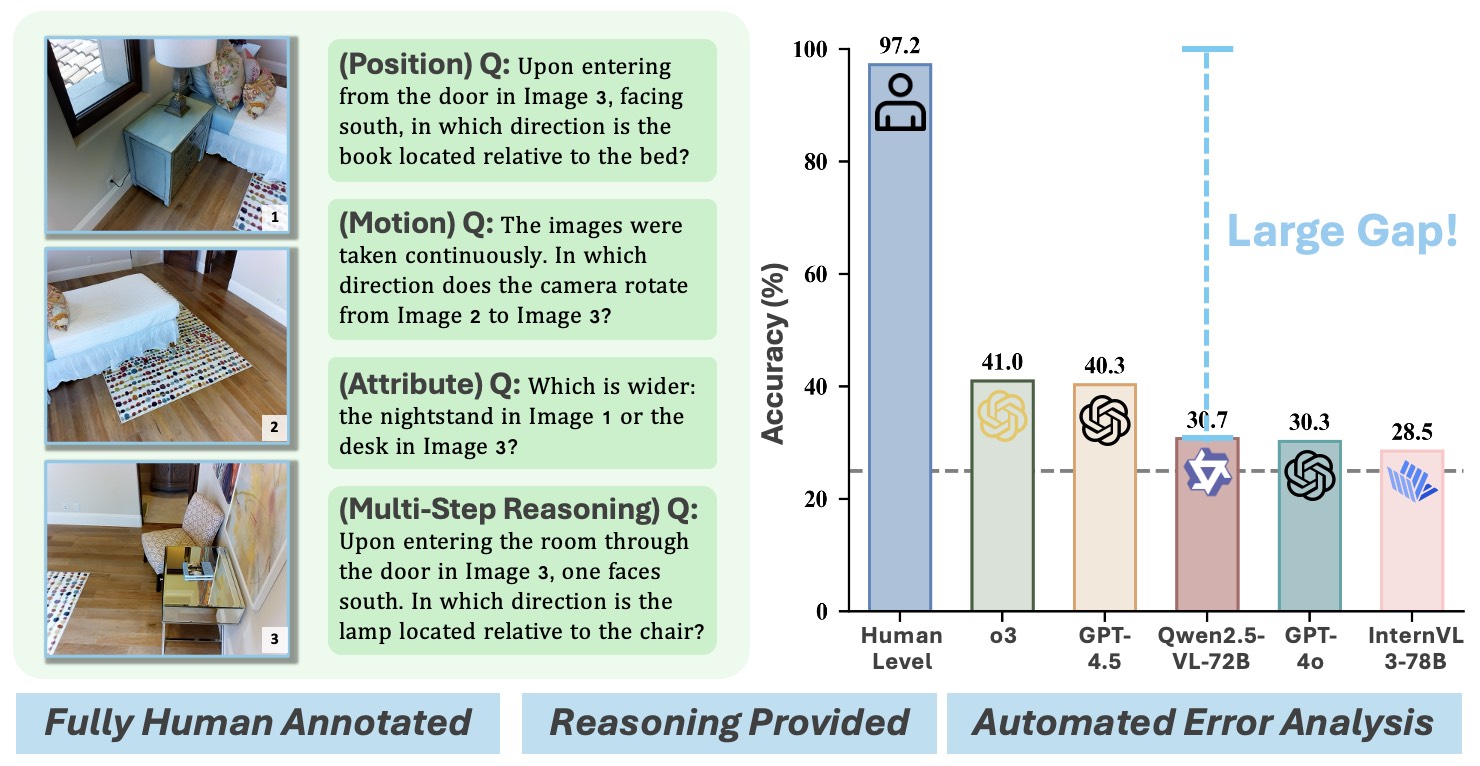

- MMSI-Bench: A Benchmark for Multi-Image Spatial Intelligence

- Sihan Yang*, Runsen Xu*†, Yiman Xie, Sizhe Yang, Mo Li, Jingli Lin, Chenming Zhu, Xiaochen Chen, Haodong Duan, Xiangyu Yue, Dahua Lin, Tai Wang#, Jiangmiao Pang#

- International Conference on Learning Representations, ICLR 2026

- [Paper] [Code] [Project] [Dataset] [中文解读]

- ChangingGrounding: 3D Visual Grounding in Changing Scenes

- Miao Hu, Zhiwei Huang, Tai Wang, Jiangmiao Pang, Dahua Lin, Nanning Zheng#, Runsen Xu#

- arXiv Preprint, 2025

- [Paper] [Code] [Project]

- OST-Bench: Evaluating the Capabilities of MLLMs in Online Spatio-Temporal Scene Understanding

- Jingli Lin*, Chenming Zhu*, Runsen Xu, Xiaohan Mao, Xihui Liu, Tai Wang#, Jiangmiao Pang#

- Neural Information Processing Systems, Datasets and Benchmarks Track, NeurIPS 2025

- [Paper] [Code] [Project] [Dataset]

- Multi-SpatialMLLM: Multi-Frame Spatial Understanding with Multi-Modal Large Language Models

- Runsen Xu, Weiyao Wang, Hao Tang, Xingyu Chen, Xiaodong Wang, Fu-Jen Chu, Dahua Lin, Matt Feiszli, Kevin J. Liang

- arXiv Preprint, 2025

- [Paper] [Code] [Project]

- Learning Video Generation for Robotic Manipulation with Collaborative Trajectory Control

- Xiao Fu, Xintao Wang, Xian Liu, Jianhong Bai, Runsen Xu, Pengfei Wan, Di Zhang, Dahua Lin

- International Conference on Learning Representations, ICLR 2026

- [Paper] [Code] [Project]

- G2VLM: Geometry Grounded Vision Language Model with Unified 3D Reconstruction and Spatial Reasoning

- Wenbo Hu*, Jingli Lin*, Yilin Long*, Yunlong Ran, Lihan Jiang, Yifan Wang, Chenming Zhu, Runsen Xu, Tai Wang†, Jiangmiao Pang†

- arXiv Preprint, 2025

- [Paper] [Code] [Project] [Model]

- VLM-Grounder: A VLM Agent for Zero-Shot 3D Visual Grounding

- Runsen Xu, Zhiwei Huang, Tai Wang, Yilun Chen, Jiangmiao Pang#, Dahua Lin

- Conference on Robot Learning, CoRL 2024

- [Paper] [Code] [Project]

- Grounded 3D-LLM with Referent Tokens

- Yilun Chen*, Shuai Yang*, Haifeng Huang*, Tai Wang, Runsen Xu, Ruiyuan Lyu, Dahua Lin, Jiangmiao Pang

- arXiv Preprint, 2024

- [Paper] [Code] [Project]

- MMScan: A Multi-Modal 3D Scene Dataset with Hierarchical Grounded Language Annotations

- Ruiyuan Lyu*, Tai Wang*, Jingli Lin*, Shuai Yang*, Xiaohan Mao, Yilun Chen, Runsen Xu, et al.

- Neural Information Processing Systems, Datasets and Benchmarks Track, NeurIPS 2024

- [Paper] [Code] [Project]

- Chat-Scene: Bridging 3D Scene and Large Language Models with Object Identifiers

- Haifeng Huang, Yilun Chen, Zehan Wang, Rongjie Huang, Runsen Xu, Tai Wang, et al.

- Neural Information Processing Systems, NeurIPS 2024

- [Paper] [Code]

- PointLLM: Empowering Large Language Models to Understand Point Clouds

- Runsen Xu, Xiaolong Wang, Tai Wang#, Yilun Chen, Jiangmiao Pang#, Dahua Lin

- European Conference on Computer Vision, ECCV 2024, Best Paper Candidate

- [Paper] [Code] [Project] [Demo] [Bilibili]

- EmbodiedScan: A Holistic Multi-Modal 3D Perception Suite Towards Embodied AI

- Tai Wang*, Xiaohan Mao*, Chenming Zhu*, Runsen Xu, et al.

- Computer Vision and Pattern Recognition, CVPR 2024

- [Paper] [Code] [Project] [中文解读]

- General VLMs

- VFlowOpt: A Token Pruning Framework for LMMs with Visual Information Flow-Guided Optimization

- Sihan Yang, Runsen Xu, Chenhang Cui, Tai Wang#, Dahua Lin, Jiangmiao Pang#

- International Conference on Computer Vision, ICCV 2025

- [Paper] [Code]

- Self-Supervised 3D Representation Learning

- MV-JAR: Masked Voxel Jigsaw and Reconstruction for LiDAR-Based Self-Supervised Pre-Training

- Runsen Xu, Tai Wang, Wenwei Zhang, Runjian Chen, Jinkun Cao, Jiangmiao Pang#, Dahua Lin

- Computer Vision and Pattern Recognition, CVPR 2023

- [Paper] [Code] [Video] [Slides]

- CO^3: Cooperative Unsupervised 3D Representation Learning for Autonomous Driving

- Runjian Chen, Yao Mu, Runsen Xu, Wenqi Shao, Chenhan Jiang, Hang Xu, Zhenguo Li, Ping Luo

- International Conference on Learning Representations, ICLR 2023

- [Paper] [Code]

- Robot Localization and Navigation

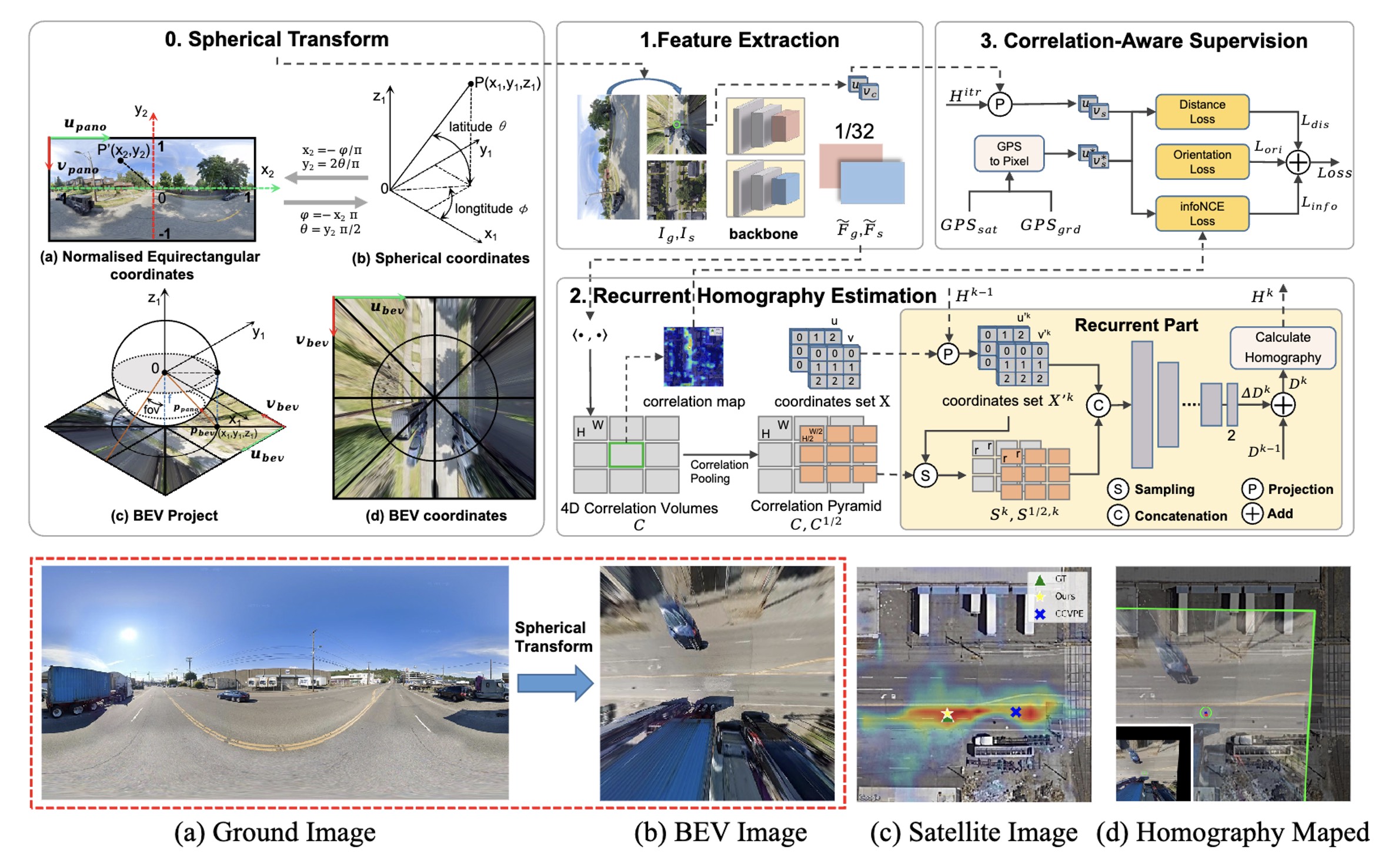

- Fine-Grained Cross-View Geo-Localization Using a Correlation-Aware Homography Estimator

- Xiaolong Wang, Runsen Xu, Zuofan Cui, Zeyu Wan, Yu Zhang

- Neural Information Processing Systems, NeurIPS 2023

- [Paper] [Code] [Demo]

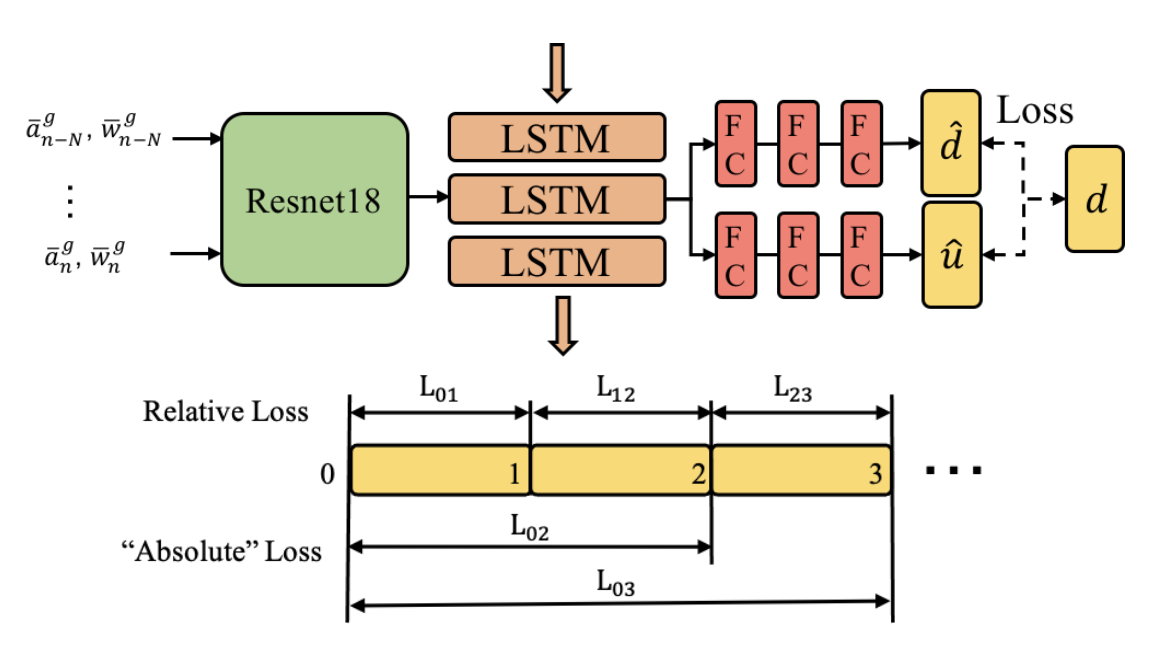

- RNIN-VIO: Robust Neural Inertial Navigation Aided Visual-Inertial Odometry in Challenging Scenes

- Danpeng Chen, Nan Wang, Runsen Xu, Weijian Xie, Hujun Bao, Guofeng Zhang

- International Symposium on Mixed and Augmented Reality, ISMAR 2021, Oral Presentation

- [Paper] [Code] [Project]

Selected Awards

- ECCV Best Paper Candidate, 2024

- Hong Kong PhD Fellowship (the most prestigious scholarship for Ph.D. studies in Hong Kong), 2022

- CUHK Vice-Chancellor’s PhD Scholarship, 2022

- Outstanding Graduate of Zhejiang University, 2022

- Outstanding Undergraduate Thesis of College of Computer Science and Technology, Zhejiang University, 2022

- National Scholarship (highest honor nationwide for Chinese undergraduates), 2019

Academic Services

- Reviewer: CVPR, ICCV, ECCV, NeurIPS, ICML, ICLR, ACM MM, ICRA, etc.

Teaching

- IERG4998: Final Year Project, Spring 2023

- IERG4998: Final Year Project, Fall 2022